Philipp Trusheim, M. Sc.

First supervisor: C. Heipke; co-supervisor: C. Brenner

Positioning is one of the main tasks in navigation. With the development of autonomous driving, a continuously available and reliable position is becoming increasingly important.

In this dissertation project, we investigate how to best combine original sensor data (e.g. grey values), variables derived from them (e.g. image features) and relative poses for integral, collaborative positioning with GNSS and image sensors in dynamic sensor networks in which the nodes recognize each other and exchange information about their state.

The project builds on approaches to photogrammetric point determination by means of bundle adjustment, which are to be extended appropriately. The architecture of the overall solution (centralized vs. decentralized) and the basic determinability of a solution, including its quality, must be considered even under restricted conditions. With the aid of the digital map, we investigate, based on simulations and real data for different scenarios of the "Experimentierstube" (e.g. a busy road with GNSS shading), to what extent collaboration enables sufficiently accurate and reliable positioning even in areas where sufficient GNSS information is not available.
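To make the role of bundle adjustment more concrete, the following minimal sketch shows the quantity such an adjustment typically minimizes: the sum of squared differences between observed image coordinates and the image coordinates predicted from a camera pose and a 3D point. It is an illustration under stated assumptions, not the project's implementation. The function names, the rotation order `R = Rx(omega) * Ry(phi) * Rz(kappa)`, the simple pinhole model with the viewing direction along the camera's +z axis, and the principal distance `c` are all assumptions chosen for brevity.

```python
import math


def rotation_matrix(omega, phi, kappa):
    """Rotation R = Rx(omega) * Ry(phi) * Rz(kappa), angles in radians.

    Assumed convention: R rotates camera-frame vectors into the world frame.
    """
    co, so = math.cos(omega), math.sin(omega)
    cp, sp = math.cos(phi), math.sin(phi)
    ck, sk = math.cos(kappa), math.sin(kappa)
    return [
        [cp * ck, -cp * sk, sp],
        [co * sk + so * sp * ck, co * ck - so * sp * sk, -so * cp],
        [so * sk - co * sp * ck, so * ck + co * sp * sk, co * cp],
    ]


def project(point, pose, c):
    """Project a 3D world point into the image of a camera.

    pose = (X0, Y0, Z0, omega, phi, kappa); c is the principal distance.
    Assumed model: ideal pinhole camera looking along its +z axis.
    """
    x0, y0, z0, omega, phi, kappa = pose
    r = rotation_matrix(omega, phi, kappa)
    d = (point[0] - x0, point[1] - y0, point[2] - z0)
    # Camera-frame coordinates: R^T * (X - X0)
    xc = r[0][0] * d[0] + r[1][0] * d[1] + r[2][0] * d[2]
    yc = r[0][1] * d[0] + r[1][1] * d[1] + r[2][1] * d[2]
    zc = r[0][2] * d[0] + r[1][2] * d[1] + r[2][2] * d[2]
    return (c * xc / zc, c * yc / zc)


def residual(observed, point, pose, c):
    """Observed minus predicted image coordinates for one observation."""
    px, py = project(point, pose, c)
    return (observed[0] - px, observed[1] - py)


def total_cost(observations, c):
    """Sum of squared residuals over (observed_xy, point, pose) triples."""
    cost = 0.0
    for observed, point, pose in observations:
        rx, ry = residual(observed, point, pose, c)
        cost += rx * rx + ry * ry
    return cost
```

In an actual adjustment, the poses and the unknown 3D points would be varied by a nonlinear least-squares solver until this cost is minimal; the sketch only evaluates it.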

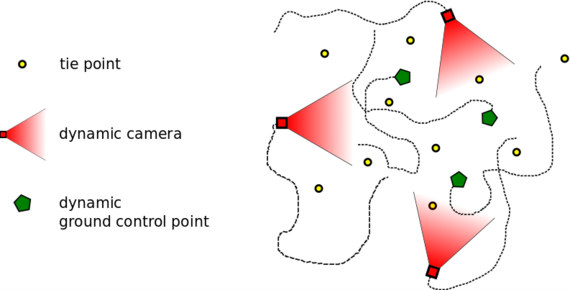

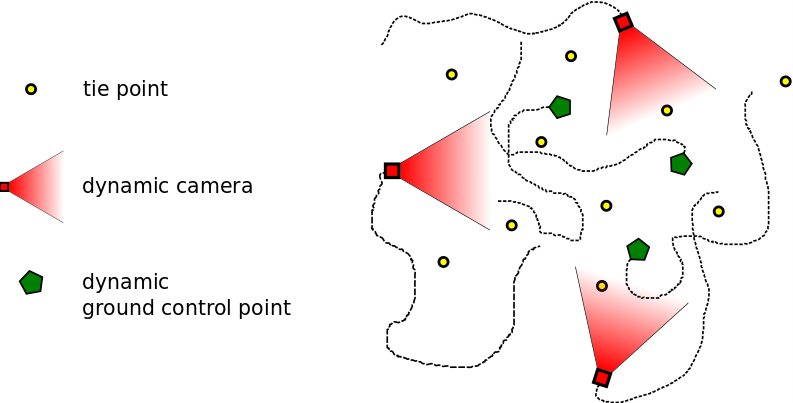

The sensor network distinguishes three types of nodes, which are illustrated in the figure:

Dynamic cameras: These are the imaging sensors used; their exterior orientation is modeled as a set of six time-dependent functions.

Dynamic GCPs (ground control points): These are points that move through the scene and whose 3D coordinates are known in the global coordinate system; their 3D coordinates depend on time.

Static tie points: Tie points connect the individual images; currently we only use static points. Their 3D coordinates are unknown.
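The three node types can be sketched as simple data structures. This is only an illustration: the class and field names are hypothetical, and modeling each of the six exterior-orientation functions as a polynomial in time is an assumption made here for the example; the text does not specify which function model is used.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple

TimeFunction = Callable[[float], float]
Point3D = Tuple[float, float, float]


def polynomial(coefficients: Sequence[float]) -> TimeFunction:
    """Return f(t) = c0 + c1*t + c2*t^2 + ... (assumed function model)."""
    def f(t: float) -> float:
        value = 0.0
        for c in reversed(coefficients):  # Horner's scheme
            value = value * t + c
        return value
    return f


@dataclass
class DynamicCamera:
    """Imaging sensor whose exterior orientation is six functions of time."""
    x: TimeFunction
    y: TimeFunction
    z: TimeFunction
    omega: TimeFunction
    phi: TimeFunction
    kappa: TimeFunction

    def pose_at(self, t: float) -> Tuple[float, ...]:
        """Evaluate (X0, Y0, Z0, omega, phi, kappa) at time t."""
        functions = (self.x, self.y, self.z, self.omega, self.phi, self.kappa)
        return tuple(f(t) for f in functions)


@dataclass
class DynamicGCP:
    """Moving point whose global 3D coordinates are known for each time."""
    trajectory: Callable[[float], Point3D]

    def position_at(self, t: float) -> Point3D:
        return self.trajectory(t)


@dataclass
class StaticTiePoint:
    """Static point connecting images; its 3D coordinates are unknown,
    so only an optional current estimate is stored."""
    estimate: Optional[Point3D] = None
```

For example, a camera moving with constant velocity along the x axis could be created with `x=polynomial([1.0, 2.0])` and constant functions for the other five parameters, while a tie point starts with `estimate=None` until the adjustment provides coordinates.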

Nienburger Straße 1

30167 Hannover